Rolling Into Intelligence: Understanding Gradient Descent Without the Headache

“If you can’t explain it simply, you don’t understand it well enough.” – Einstein

Let’s do exactly that explain Gradient Descent simply.What Is Gradient Descent?

Imagine you're blindfolded and dropped somewhere in the mountains. Your goal is to reach the lowest point in the entire landscape the valley. You can’t see anything, but you can feel the slope under your feet.

You take a step in the direction that feels downhill. Then you stop, feel again, and take another step. Over and over, you repeat this step by step until you reach the bottom.

This, in essence, is Gradient Descent.

In machine learning, this “valley” is the minimum value of a loss function a fancy term for how wrong your model is. Gradient Descent is how the model learns to reduce that error over time.

Why Is It Important?

Because without Gradient Descent (or similar optimizers), your machine learning model would just sit there clueless. It's like teaching a student without giving them feedback — no learning happens.

Gradient Descent allows your model to:

-

Learn from mistakes (loss values)

-

Adjust itself to improve predictions

-

Find the best set of weights or parameters

Breaking It Down Technically

Let’s look at the basics:

The Components

This formula helps the model take a small step towards better accuracy.

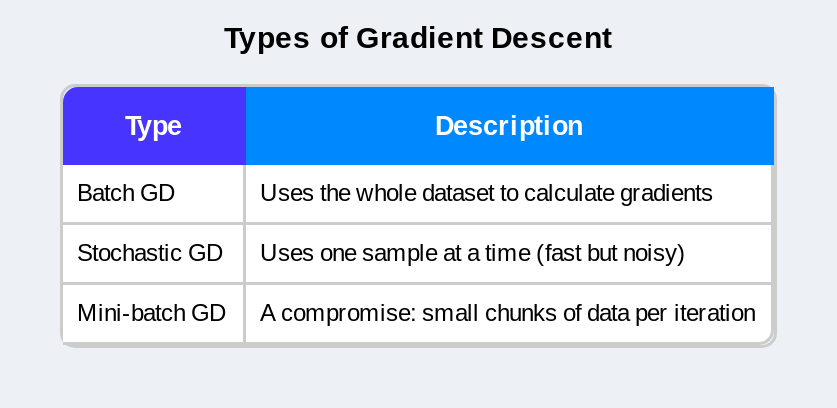

Types of Gradient Descent

Common Pitfalls

1. Overshooting the Minimum

A high learning rate might cause the model to skip past the lowest point.

Sometimes models stop at a “good enough” point rather than the best one.

Especially in deep networks, gradients can get too small to make progress.

Sometimes models stop at a “good enough” point rather than the best one.

3. Vanishing Gradients

Final Thoughts: It’s Not Just Math It’s Learning

Gradient Descent may be powered by equations, but it mimics something very human: trial and error. Every prediction, every correction, is part of the machine’s learning journey just like ours.

So the next time you train a model, remember: behind the scenes, it’s a blindfolded explorer feeling its way down a mountain, trying to understand the world a little better with each step.

Comments

Post a Comment